Publications

An End-to-End Platform for Digital Pathology Using Hyperspectral Autofluorescence Microscopy and Deep Learning Based Virtual Histology

C. McNeil, P. Wong, N. Sridhar, Y. Wang, C. Santori, C. Wu, A. Homyk, M. Gutierrez, A. Behrooz, D. Tiniakos, A. Burt, R. Pai, K. Tekiela, P. Chen, L. Fischer, E. Martins, S. Seyedkazemi, D. Freedman, C. Kim, and P. Cimermancic

Nature Modern Pathology, 2024

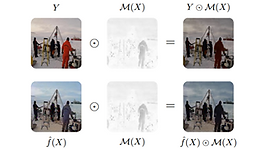

Diffusion Models for Generative Histopathology

N. Sridhar, C. McNeil, M. Elad, E. Rivlin, and D. Freedman

International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) Workshop on Deep Generative Models for Medical Image Computing and Computer Assisted Intervention (DGM4MICCAI), 2023

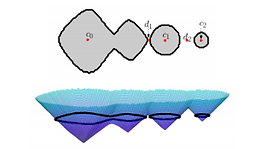

Designing Nonlinear Photonic Crystals for High-Dimensional Quantum State Engineering

E. Rozenberg, A. Karnieli, O. Yesharim, J. Foley-Comer, S. Trajtenberg-Mills, S. Mishra, S. Prabhakar, R. Singh, D. Freedman, A. Bronstein, and A. Arie

International Conference on Learning Representations (ICLR) Workshop on Machine Learning for Materials (ML4Materials), 2023

A Machine Learning Approach to Generate Quantum Light

E. Rozenberg, A. Karnieli, O. Yesharim, J. Foley-Comer, S. Trajtenberg-Mills, S. Mishra, S. Prabhakar, R. Singh, D. Freedman, A. Bronstein, and A. Arie

International Conference on Learning Representations (ICLR) Workshop on Physics for Machine Learning (Physics4ML), 2023

Impacts of a Novel AI-Enabled Polyp Detection System: A Prospective Randomized Clinical Trial

J. Lachter, Y. Raz, A. Kobzan, A. Suissa, A. Bezobchuk, B. Makhoul, A. Partoush, E. Zian, R. Shalabi, N. Rabani, D. Freedman, S. Plowman, S. Schlachter, E. Rivlin, and R. Goldenberg

United European Gastroenterology Journal, 2022

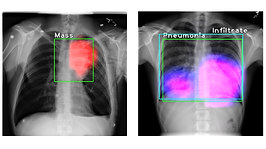

Detection of Elusive Polyps via a Large-Scale Artificial Intelligence System

D.M. Livovsky, D. Veikherman, T. Golany, A. Aides, V. Dashinski, N. Rabani, D. Ben Shimol, Y. Blau, L. Katzir, I. Shimshoni, Y. Liu, O. Segol, E. Goldin, G. Corrado, J. Lachter, Y. Matias, E. Rivlin, and D. Freedman

Gastrointestinal Endoscopy, 2021

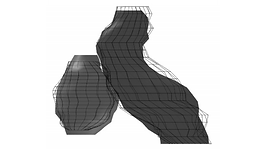

Accurate, Robust, and Flexible Real-time Hand Tracking

T. Sharp, C. Keskin, D. Robertson, J. Taylor, J. Shotton, D. Kim, C. Rhemann, I. Leichter, A. Vinnikov, Y. Wei, D. Freedman, P. Kohli, E. Krupka, A. Fitzgibbon, and S. Izadi

ACM CHI Conference on Human Factors in Computing Systems (CHI), 2015